AI Cost Optimization: Proven FinOps Strategies to Control Your AI Infrastructure Costs

Optimizing AI costs has become a crucial challenge for organizations as AI adoption accelerates. Indeed, over 90% of CloudZero customers are now tracking their AI-related spending within their platform. The financial stakes are significant: setting up a data center in the United States can cost up to $11.6 million per megawatt of IT load, while a single NVIDIA H100 GPU carries a price tag of approximately $40,000.

Against this backdrop, implementing FinOps for AI helps organizations forecast costs more accurately and make data-driven decisions about AI spending. We’ve seen strong results from AWS cost optimization techniques, including over $1 million in immediate savings through optimized inference workloads and token caching, as well as reductions of more than 50% in compute costs. For instance, using Managed Spot Training in Amazon SageMaker can reduce training costs by up to 90% compared to On-Demand instances. Applied systematically, these strategies can turn unpredictable, threatening bills into manageable, predictable cost structures.

In this article, we’ll explore proven strategies to optimize your AI infrastructure costs without sacrificing innovation or performance. From understanding unique AI cost drivers to implementing advanced FinOps practices at scale, we’ll provide actionable insights to help you balance innovation with financial sustainability.

Understanding AI Cost Drivers

AI technologies are fundamentally transforming businesses across sectors, yet they come with substantially higher price tags than conventional computing. Consequently, understanding what drives these costs is essential for implementing effective optimization strategies.

Why AI workloads are more expensive than traditional cloud tasks

The financial demands of AI workloads dramatically exceed those of standard cloud applications. According to recent studies, AI queries can require up to 10 times more processing power than traditional workloads. This disparity becomes even more apparent when comparing resource requirements:

- Natural language processing tasks consume approximately 100GB of data and 10 GFLOPS per hour

- Computer vision operations need around 10GB of data and 100 GFLOPS per hour

- Machine learning tasks demand up to 1TB of data and 1,000 GFLOPS of processing power

In contrast, traditional cloud workloads like web hosting or databases typically require just 1GB of data and 1 GFLOPS per hour.

The financial impact of these resource demands is substantial. Organizations are now spending an average of 30% more on cloud services compared to last year, primarily due to AI and generative AI implementations. Furthermore, 72% of FinOps practitioners report that GenAI spending is becoming increasingly difficult to manage.

This cost escalation shows no signs of slowing down. Experts project that computing costs will climb by approximately 89% between 2023 and 2025, with 70% of executives identifying generative AI as the primary driver behind this increase.

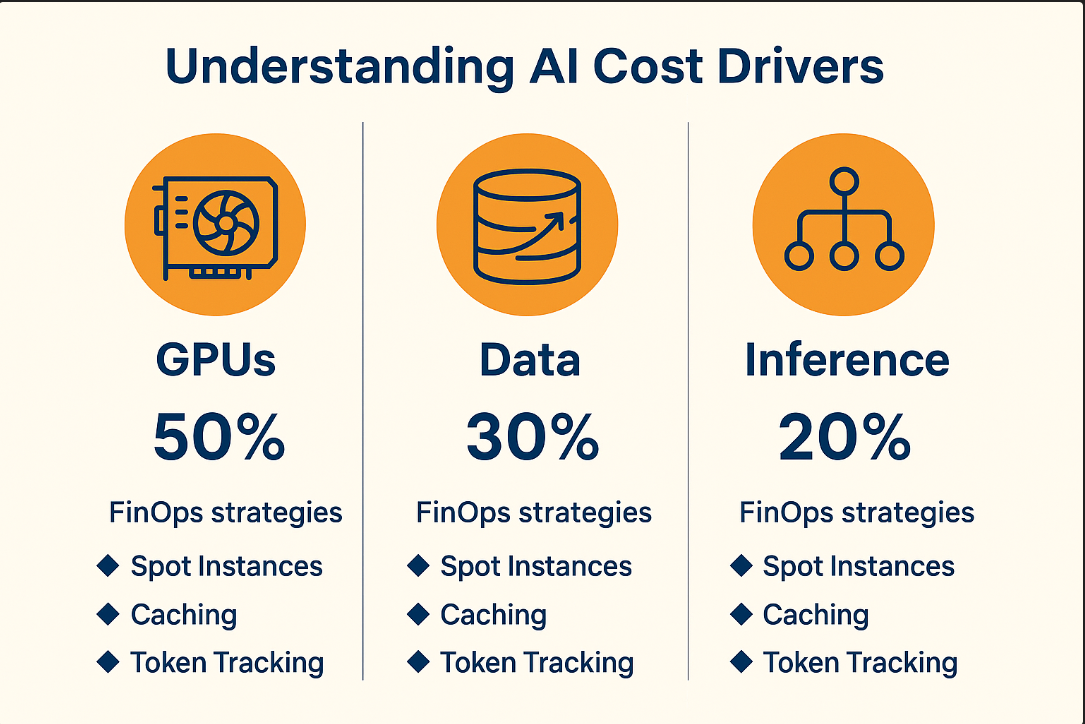

The role of GPUs, data, and inference in cost escalation

Three key components primarily drive AI costs: GPUs, data management, and inference operations.

First and foremost, specialized hardware requirements represent a major expense. A single high-performance NVIDIA GPU can cost approximately $30,000 to $40,000, creating substantial upfront investment needs. Additionally, the global scarcity of GPUs has created a volatile infrastructure market with fluctuating prices.

Data-related expenses form the second major cost category. Beyond storage costs, organizations must account for data acquisition, cleaning, and preparation expenses. Small files can trigger excessive API calls, each incurring micro-charges that accumulate rapidly. Notably, egress fees for moving data between environments often create unexpected cost spikes, especially for distributed training operations.

Inference costs constitute the third and frequently underestimated component. For most companies using AI, the ongoing expense of running models (inference) accounts for 80-90% of total compute costs over a model’s production lifecycle. Given that each prompt generates tokens with associated computational costs, inference expenses grow proportionally with usage volume. These costs continue rising due to wider business adoption, increasing model complexity, and growing data processing requirements.
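
To make the proportional relationship between usage and inference spend concrete, here is a minimal sketch of a per-request and monthly cost estimate. The function names and the per-1,000-token prices are illustrative assumptions, not any provider's actual rates.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimate the cost of one inference call from its token counts."""
    return (input_tokens / 1000) * input_price_per_1k \
        + (output_tokens / 1000) * output_price_per_1k


def monthly_cost(requests_per_day: int, avg_input_tokens: int, avg_output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float,
                 days: int = 30) -> float:
    """Scale the average per-request cost by request volume over a month."""
    per_request = request_cost(avg_input_tokens, avg_output_tokens,
                               input_price_per_1k, output_price_per_1k)
    return per_request * requests_per_day * days


# Hypothetical prices: 0.01 per 1K input tokens, 0.03 per 1K output tokens.
estimate = monthly_cost(10_000, 1_000, 500, 0.01, 0.03)
```

Because cost is linear in both request volume and tokens per request, doubling either one doubles the estimate, which is why trimming tokens per call matters so much at scale.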

How FinOps for AI differs from traditional FinOps

Traditional FinOps practices require significant adaptation to address AI’s unique financial challenges. The probabilistic nature of GenAI fundamentally differs from deterministic cloud operations—the same prompt can produce various outputs with differing lengths and costs. This unpredictability makes accurate cost forecasting substantially more challenging.

In contrast to traditional cloud resources measured in clear units like vCPU-hours or GB-months, AI costs typically revolve around “tokens,” a unit whose definition and count vary dramatically between models. This inconsistency complicates budgeting and comparison between services.

Beyond that, AI systems demonstrate extreme sensitivity to minor changes. Moving a comma in a prompt or switching model versions can significantly affect response lengths and costs. Even failures are expensive—models may generate thousands of costly tokens that yield unusable results, requiring new approaches to failure detection and cost control.

Effective FinOps for AI focuses on visibility into spending across GPU instances and API calls, cost optimization through appropriate model selection and resource right-sizing, accurate forecasting to prevent budget overruns, and continuous ROI measurement to ensure AI investments deliver business value.

Model Selection and Customization Strategies

Selecting the appropriate model and customization approach represents one of the most effective ways to manage AI costs without sacrificing quality. Strategic model selection directly impacts your operational expenses and should be a cornerstone of your optimization strategy.

Choosing the right model size for your use case

Smaller models can be surprisingly efficient, offering substantial benefits beyond just lower costs. These lighter models require less computing power, making them faster to deploy and more suitable for devices with limited resources. A gradual approach to model selection often yields the best results—begin with larger models for proof-of-concept work, then systematically reduce model size until performance begins to deteriorate.

Following this methodology allows you to identify the most cost-efficient model that still meets your requirements. Initially, using larger models provides clearer signals about whether an LLM can accomplish your task without spending excessive time on prompt engineering. Thereafter, you can optimize by:

- Writing detailed, concise prompts to guide smaller models

- Implementing few-shot prompting to help models learn from examples

Remember that different tasks demand different model capabilities. For instance, domain-specific tasks may perform better with a properly fine-tuned smaller model rather than a larger, general-purpose one.
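
The step-down approach described above can be sketched as a simple search. This is an illustration under stated assumptions: the model names, the `evaluate` callback, and the quality threshold are hypothetical placeholders for your own evaluation harness.

```python
from typing import Callable, Optional, Sequence


def smallest_adequate_model(models_large_to_small: Sequence[str],
                            evaluate: Callable[[str], float],
                            min_score: float) -> Optional[str]:
    """Walk from the largest model down and return the smallest one that
    still meets min_score. Stops at the first model that falls short."""
    chosen = None
    for name in models_large_to_small:
        if evaluate(name) >= min_score:
            chosen = name   # still good enough, keep shrinking
        else:
            break           # performance has deteriorated, stop here
    return chosen


# Hypothetical evaluation scores on a task-specific test set.
scores = {"large": 0.95, "medium": 0.92, "small": 0.80}
pick = smallest_adequate_model(["large", "medium", "small"], scores.__getitem__, 0.90)
```

If even the largest model misses the threshold, the function returns `None`, which is a useful early signal that the task may need a different approach rather than more prompt tuning.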

When to use fine-tuning vs. retrieval-augmented generation (RAG)

The decision between fine-tuning and RAG significantly impacts both costs and performance. RAG supplements models by connecting them to external data sources without modifying the model itself, whereas fine-tuning actually adjusts the model’s parameters through additional training.

RAG excels at handling frequently updated information, pulling real-time data directly from your sources. This approach maintains data freshness without requiring model retraining. Conversely, fine-tuning embeds knowledge directly into the model, making it particularly effective for specialized tasks requiring consistent output formats.

From a cost perspective, the calculation changes based on usage volume. RAG typically has lower upfront costs but may become more expensive at scale due to increased token usage with every query. Each RAG request includes substantial context information, potentially inflating token counts by hundreds per call. Fine-tuning initially requires more investment in data preparation and training resources but can prove more economical for high-volume applications by reducing per-query token count and latency.
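
A rough break-even calculation can help frame this trade-off. The sketch below makes simplifying assumptions that may not hold in practice: both approaches pay the same per-token price, the only recurring difference is the extra context RAG adds to each query, and hosting or retrieval infrastructure costs are ignored. All figures are hypothetical.

```python
import math


def break_even_queries(fine_tune_upfront: float,
                       extra_context_tokens: int,
                       price_per_1k_tokens: float) -> int:
    """Number of queries after which fine-tuning's upfront cost is offset
    by the per-query token savings versus RAG (simplified model)."""
    extra_cost_per_query = extra_context_tokens / 1000 * price_per_1k_tokens
    if extra_cost_per_query <= 0:
        raise ValueError("RAG must add a positive per-query cost to break even")
    return math.ceil(fine_tune_upfront / extra_cost_per_query)


# Hypothetical: 5,000 upfront for fine-tuning, 500 extra context tokens per
# RAG query, 0.01 per 1K tokens.
queries = break_even_queries(5000.0, 500, 0.01)
```

With these placeholder numbers the break-even lands at roughly one million queries; below that volume RAG is cheaper under this model, above it fine-tuning starts to pay off.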

Prompt engineering to reduce token usage and cost

Since tokens represent the basic billing unit for most LLM services, optimizing prompts can directly reduce expenses. Even modest improvements in token efficiency create significant savings at scale—some organizations have achieved token reductions of 3-10% without compromising output quality.

Effective token optimization strategies include:

- Crafting clear, concise instructions that eliminate unnecessary details

- Using strategic abbreviations and acronyms where appropriate

- Removing redundant words and information from prompts

- Chunking complex information semantically rather than arbitrarily

Consider this practical example: changing a verbose prompt like “Please provide a detailed summary of the customer’s purchase history, including all items purchased, dates of purchase, and total amount spent” (25 tokens, $0.03) to simply “Summarize the customer’s purchase history” (7 tokens, $0.01) can reduce costs by over 60%.
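
The arithmetic behind that example can be checked with a short helper. The token counts and dollar figures are the ones quoted above; the function name is just an illustration.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


token_saving = percent_reduction(25, 7)       # about 72% fewer tokens
cost_saving = percent_reduction(0.03, 0.01)   # about 67% lower cost
```

Both figures are consistent with the "over 60%" reduction cited for this prompt.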

Overall, thoughtful model selection coupled with strategic customization represents a critical opportunity to balance performance with financial sustainability.

Optimizing Training and Inference Workloads

Training and inference workloads represent major cost centers in AI operations. By implementing strategic optimization techniques, you can dramatically reduce expenses without compromising model performance.

Use of spot instances and auto-scaling

Spot instances offer a powerful cost reduction strategy, providing discounts of up to 90% compared to On-Demand pricing. These instances leverage unused cloud capacity, making them ideal for fault-tolerant AI workloads. The NFL exemplifies this approach, saving $2 million per season by utilizing 4,000 EC2 Spot Instances across more than 20 instance types.

Effective auto-scaling complements spot instances by dynamically adjusting resources based on demand. Modern auto-scaling solutions can scale down to zero during idle periods, eliminating unnecessary expenses. Moreover, advanced auto-scaling technologies can spin up new instances within seconds rather than minutes, ensuring optimal resource utilization without performance degradation.
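
When weighing spot capacity for training, it helps to account for work that must be redone after interruptions. The following is a minimal sketch, assuming a flat spot discount and a hypothetical `rework_fraction` that represents extra hours caused by restarts from the last checkpoint; real spot prices and interruption rates vary.

```python
def spot_training_cost(on_demand_hourly: float, training_hours: float,
                       spot_discount: float, rework_fraction: float = 0.0) -> float:
    """Estimate training cost on spot capacity.

    spot_discount: fraction off the On-Demand price (0.7 means 70% cheaper).
    rework_fraction: extra hours from interruptions, as a fraction of the run.
    """
    if not 0 <= spot_discount < 1:
        raise ValueError("spot_discount must be in [0, 1)")
    if rework_fraction < 0:
        raise ValueError("rework_fraction must be non-negative")
    spot_hourly = on_demand_hourly * (1 - spot_discount)
    return spot_hourly * training_hours * (1 + rework_fraction)


# Hypothetical: 10/hour On-Demand, 100-hour job, 70% discount, 20% rework.
on_demand_total = 10.0 * 100
spot_total = spot_training_cost(10.0, 100, 0.7, 0.2)
```

Even with 20% of the run repeated, the spot estimate here comes to roughly 360 against 1,000 On-Demand, which is why frequent checkpointing pairs well with spot capacity.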

Offloading inference to client devices

On-device inference presents another significant optimization opportunity. This approach shifts computational work to end-user devices, delivering multiple benefits:

- Reduced latency through local processing

- Enhanced privacy by keeping sensitive data on-device

- Lower operational costs by decreasing cloud compute usage

Intelligent decision engines can determine when to process locally versus remotely based on network conditions, device capabilities, and execution time requirements. These systems achieve up to 81.33% accuracy in identifying optimal processing locations.
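
A decision engine of this kind can be approximated with a few rules. The sketch below is a deliberately simple, rule-based stand-in (not the learned systems behind the accuracy figure above); the `InferenceContext` fields and the preference for local execution are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class InferenceContext:
    network_latency_ms: float      # round-trip time to the cloud endpoint
    device_can_run_model: bool     # enough memory/compute on the device
    est_local_ms: float            # estimated on-device execution time
    est_cloud_compute_ms: float    # estimated server-side execution time
    deadline_ms: float             # latency budget for the request


def choose_location(ctx: InferenceContext) -> str:
    """Return "device" or "cloud" for a single inference request."""
    if not ctx.device_can_run_model:
        return "cloud"
    cloud_total_ms = ctx.network_latency_ms + ctx.est_cloud_compute_ms
    if ctx.est_local_ms <= ctx.deadline_ms:
        return "device"   # meets the deadline with no cloud compute cost
    if cloud_total_ms <= ctx.deadline_ms:
        return "cloud"
    # Neither meets the deadline: pick whichever is expected to finish sooner.
    return "device" if ctx.est_local_ms <= cloud_total_ms else "cloud"
```

Preferring the device whenever it meets the deadline is the cost-driven choice; a latency-driven policy would instead always pick the faster option.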

Caching and batching to reduce repeated compute

Implementing strategic caching mechanisms substantially reduces unnecessary computation. For LLMs, KV caching stores previous key and value tensors in GPU memory, preventing redundant calculations during token generation. PagedAttention further optimizes this process by enabling noncontiguous memory storage, significantly reducing wastage.
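
To see why KV caching matters, consider a simplified count of how many per-token key/value projections one attention layer performs while generating a sequence. This is a back-of-the-envelope model, not a measurement of any particular framework.

```python
def kv_projections_without_cache(num_tokens: int) -> int:
    """Without a cache, step t recomputes keys/values for all t tokens so far."""
    return num_tokens * (num_tokens + 1) // 2


def kv_projections_with_cache(num_tokens: int) -> int:
    """With a cache, each token's key/value pair is computed exactly once."""
    return num_tokens


n = 1_000
redundant_work_factor = kv_projections_without_cache(n) / kv_projections_with_cache(n)
```

The uncached count grows quadratically with sequence length while the cached count grows linearly, so the savings widen as outputs get longer. The trade-off is GPU memory for the stored tensors, which is the waste PagedAttention targets.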

Batching inference requests likewise improves throughput by spreading the memory cost of weights across multiple operations. Beyond traditional static batching, in-flight batching immediately replaces completed sequences with new requests, maximizing GPU utilization.
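
As a minimal illustration of static batching (the simpler of the two approaches mentioned above, not in-flight batching), the helper below groups pending requests into fixed-size batches. The function name and batch size are illustrative.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batched(requests: Sequence[T], max_batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive batches of at most max_batch_size requests."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    for start in range(0, len(requests), max_batch_size):
        yield list(requests[start:start + max_batch_size])


pending = ["a", "b", "c", "d", "e"]
batches = list(batched(pending, 2))   # [["a", "b"], ["c", "d"], ["e"]]
```

Each batch would be sent through the model in a single forward pass, so the weights are read from memory once per batch instead of once per request.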

Serverless and asynchronous inference options

Serverless inference eliminates infrastructure management entirely, automatically handling scaling and resource provisioning. Its pay-per-use model proves especially cost-effective for workloads with variable or unpredictable traffic patterns.

Asynchronous inference further optimizes costs by queuing requests and processing them efficiently. This approach excels for non-real-time applications like batch processing, enabling better resource utilization without latency constraints. For predictable bursts in traffic, provisioned concurrency maintains warm endpoints that respond within milliseconds while preserving the cost benefits of serverless models.

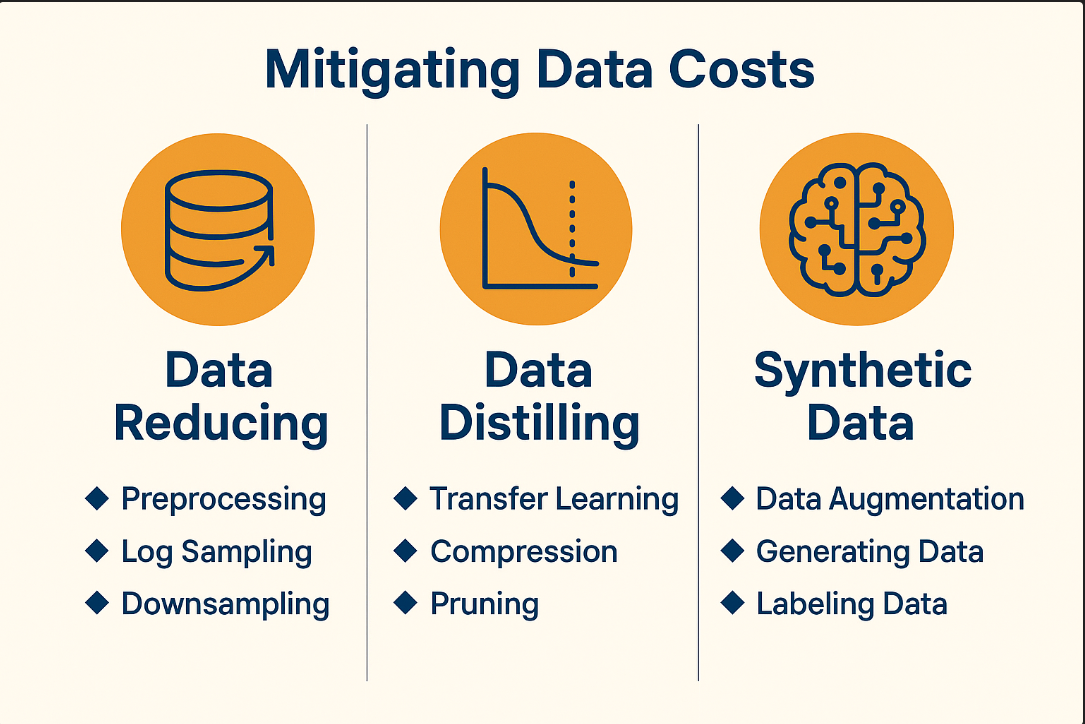

Data and Storage Cost Management

Data management represents a critical yet often overlooked dimension of AI cost optimization. Effective strategies in this area can substantially reduce expenses while improving model performance.

Cleaning and curating training data

Data curation forms the backbone of successful AI implementations. This systematic process of selecting and structuring data dramatically improves its value for AI usage. Poorly curated datasets lead to several costly outcomes, including higher computational expenses and extended training times.

Most importantly, thoughtful data curation directly impacts your bottom line. Large, noisy datasets demand more computation and longer training periods, whereas proper filtering leads to both cost savings and decreased training time. Beyond immediate savings, high-quality data curation enables reaching performance milestones more efficiently—improving accuracy from 90% to 95% typically requires precisely collected data rather than simply more data.

Using intelligent storage tiers (e.g., S3 Intelligent-Tiering)

Intelligent storage solutions offer substantial cost benefits for AI workloads. Amazon S3 Intelligent-Tiering automatically moves data between access tiers based on changing usage patterns, saving users over $4 billion in storage costs since its 2018 launch.

This approach works by monitoring access patterns and automatically shifting objects that haven’t been accessed for 30 consecutive days to the Infrequent Access tier (40% savings), then to the Archive Instant Access tier after 90 days (68% savings). For rarely accessed data, optional Archive Access and Deep Archive Access tiers can reduce storage costs by up to 95%.
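
The tiering thresholds above can be expressed as a small lookup. This sketch only models the three automatic tiers and the savings percentages quoted in this section; it ignores the optional archive tiers and any monitoring charges, and the per-GB price is a placeholder.

```python
# Savings relative to the Frequent Access tier, as quoted above.
TIER_SAVINGS = {
    "Frequent Access": 0.0,
    "Infrequent Access": 0.40,
    "Archive Instant Access": 0.68,
}


def intelligent_tier(days_since_last_access: int) -> str:
    """Map days without access to the automatic tier an object would sit in."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access must be non-negative")
    if days_since_last_access >= 90:
        return "Archive Instant Access"
    if days_since_last_access >= 30:
        return "Infrequent Access"
    return "Frequent Access"


def monthly_storage_cost(gb: float, frequent_price_per_gb: float,
                         days_since_last_access: int) -> float:
    """Estimate monthly storage cost for data in its current tier."""
    tier = intelligent_tier(days_since_last_access)
    return gb * frequent_price_per_gb * (1 - TIER_SAVINGS[tier])
```

For example, 1,000 GB of training data untouched for 45 days would be billed at the Infrequent Access rate, 40% below what the same data costs in the Frequent Access tier.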

Reducing data transfer and duplication

Data transfer expenses frequently create unexpected cost spikes in AI operations, especially during distributed training. To mitigate these costs, first use tiered storage: keep frequently accessed data in hot storage and move older data to cold storage.

Secondly, minimize egress costs by containing AI processing within the same cloud region. Consider using content delivery networks or caching mechanisms for inference data to significantly reduce transfer expenses.

Data duplication presents another challenge, with costs extending beyond storage to slower processing and frustrating workflows. As data volumes grow, duplication issues intensify, affecting AI model training accuracy. Machine learning-powered deduplication offers an effective solution, identifying and removing duplicates even when records aren’t exact matches.
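
To show what matching non-identical records looks like, here is a small sketch that uses plain string similarity from Python's standard library as a stand-in for the machine learning approaches mentioned above. The 0.9 threshold and the sample records are assumptions, and the pairwise comparison is quadratic, so it only suits small datasets.

```python
from difflib import SequenceMatcher
from typing import List


def normalize(record: str) -> str:
    """Lowercase and collapse whitespace so trivial differences disappear."""
    return " ".join(record.lower().split())


def deduplicate(records: List[str], threshold: float = 0.9) -> List[str]:
    """Keep the first occurrence of each record, dropping near-duplicates."""
    kept: List[str] = []
    for record in records:
        norm = normalize(record)
        is_duplicate = any(
            SequenceMatcher(None, norm, normalize(existing)).ratio() >= threshold
            for existing in kept
        )
        if not is_duplicate:
            kept.append(record)
    return kept


rows = ["Acme Corp, 12 Main St", "acme corp,  12 main st.", "Globex Inc, 9 Elm Rd"]
unique_rows = deduplicate(rows)   # keeps the first and third rows
```

Production systems typically add blocking or hashing to avoid comparing every pair, and learned similarity models to catch duplicates that differ more than a few characters.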

FinOps for AI at Scale

Scaling FinOps practices for AI requires sophisticated systems that provide comprehensive visibility and control across complex environments. As enterprises embrace generative AI, they face increasing challenges in managing costs across multiple projects and business lines.

Real-time cost visibility and token-level tracking

Effective AI cost management demands granular visibility—down to individual API calls and token usage. Without tracking input and output tokens per endpoint or tagging model calls by feature, teams operate blindly. Modern platforms enable token-level cost intelligence, providing precise insights into expenses for each model, feature, or experiment.
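
A minimal sketch of token-level tracking might look like the following. The class name, the feature labels, and the price table are hypothetical; a real deployment would pull token counts from API responses and prices from the provider's rate card.

```python
from collections import defaultdict
from typing import Dict, Tuple


class TokenCostTracker:
    """Accumulate token usage and cost per (model, feature) pair."""

    def __init__(self, prices_per_1k: Dict[str, Tuple[float, float]]):
        # prices_per_1k maps model name -> (input price, output price) per 1K tokens
        self.prices = prices_per_1k
        self.cost_by_key: Dict[Tuple[str, str], float] = defaultdict(float)
        self.tokens_by_key: Dict[Tuple[str, str], int] = defaultdict(int)

    def record(self, model: str, feature: str,
               input_tokens: int, output_tokens: int) -> float:
        """Log one model call and return its cost."""
        in_price, out_price = self.prices[model]
        cost = input_tokens / 1000 * in_price + output_tokens / 1000 * out_price
        self.cost_by_key[(model, feature)] += cost
        self.tokens_by_key[(model, feature)] += input_tokens + output_tokens
        return cost

    def cost_by_feature(self) -> Dict[str, float]:
        """Roll costs up to the feature level across all models."""
        totals: Dict[str, float] = defaultdict(float)
        for (_, feature), cost in self.cost_by_key.items():
            totals[feature] += cost
        return dict(totals)
```

Calling `record` at every model invocation, tagged with the product feature that triggered it, is what makes per-feature and per-experiment cost reports possible later.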

Cost allocation by model, region, and team

Implementing robust tagging strategies is essential for organizing AI resources by projects, teams, or specific workloads. Custom tags such as project_id, cost_center, model_version, and environment improve cost transparency. This granular allocation allows organizations to assign AI costs to relevant departments through chargeback systems.
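
Once resources carry tags like these, allocation becomes a grouping exercise. The sketch below assumes billing line items arrive as dictionaries with a `cost` field and a `tags` mapping, which is a simplification of real billing export formats.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def allocate_by_tag(line_items: Iterable[Mapping], tag_key: str,
                    untagged_label: str = "untagged") -> Dict[str, float]:
    """Sum line-item costs by the value of one tag, e.g. cost_center."""
    totals: Dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, untagged_label)
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing line items.
items = [
    {"cost": 10.0, "tags": {"cost_center": "search", "environment": "prod"}},
    {"cost": 5.0, "tags": {"cost_center": "support", "environment": "prod"}},
    {"cost": 2.5, "tags": {"environment": "dev"}},
]
by_cost_center = allocate_by_tag(items, "cost_center")
```

Surfacing an explicit "untagged" bucket is a deliberate choice: its size is a quick measure of how complete your tagging coverage is.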

Forecasting and anomaly detection with FinOps tools

AI-powered forecasting tools analyze historical spending patterns to predict future costs. Simultaneously, anomaly detection systems automatically identify unusual spending patterns that deviate from forecasts. These tools can detect same-day anomalies without requiring complex setup.
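
As a rough illustration of the idea, the sketch below flags a day's spend when it sits far from the recent average. A z-score over trailing history is a simple statistical stand-in chosen for this example; commercial FinOps tools generally use more sophisticated models, and the threshold of 3.0 is an assumption.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], today: float,
                 z_threshold: float = 3.0) -> bool:
    """Flag today's spend if it deviates strongly from recent daily spend."""
    if len(history) < 2:
        raise ValueError("need at least two days of history")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu   # any change from perfectly flat spend stands out
    return abs(today - mu) / sigma > z_threshold


# Hypothetical daily spend for the past week.
last_week = [100, 102, 98, 101, 99, 100, 103]
spike = is_anomalous(last_week, 180)    # True: far outside the normal range
normal = is_anomalous(last_week, 101)   # False: within normal variation
```

Running a check like this per model or per feature, rather than on the total bill, makes it easier to trace a spike back to the prompt or deployment change that caused it.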

Aligning engineering and finance teams

Even where finance and engineering share strategic goals, they often approach AI adoption differently: only 21% of CFOs plan to work exclusively with in-house technology teams to adopt AI. Creating alignment through shared metrics and regular communication helps both teams speak the same language.

Conclusion

As organizations rapidly adopt AI technologies, managing their substantial costs becomes essential for long-term success. Indeed, without proper optimization strategies, AI expenses can quickly spiral out of control, threatening innovation budgets and ROI expectations. Throughout this article, we’ve explored how FinOps principles specifically tailored for AI workloads can transform threatening bills into predictable cost structures.

First and foremost, understanding AI cost drivers provides the foundation for effective optimization. The unique nature of AI expenses, driven by specialized hardware, data management, and inference operations, requires approaches that go beyond traditional FinOps practices. Accordingly, strategic model selection represents one of your most powerful cost-saving opportunities. Starting with larger models for proof-of-concept work and then systematically reducing size until you find the optimal balance between performance and cost can deliver significant savings.

Beyond model selection, workload optimization techniques such as spot instances, caching, and batching can reduce compute costs by more than 50%. Additionally, data management strategies like intelligent storage tiers and deduplication address often-overlooked expenses that accumulate rapidly at scale.

For enterprise-level implementations, comprehensive FinOps systems with token-level tracking and cost allocation capabilities become crucial. These tools provide the visibility needed for accurate forecasting and anomaly detection while facilitating alignment between engineering and finance teams.

The financial sustainability of AI initiatives ultimately depends on balancing innovation with cost consciousness. Rather than viewing FinOps as a constraint, consider it an enabler—allowing your organization to experiment more freely within predictable budgets. After implementing these proven optimization techniques, you’ll not only manage current AI expenses more effectively but also establish the foundation for sustainable scaling as AI capabilities continue to evolve.

Key Takeaways

AI workloads cost significantly more than traditional cloud computing, but strategic FinOps implementation can deliver over 50% cost reductions while maintaining performance and innovation capacity.

- Start large, then right-size: Begin with larger models for proof-of-concept, then systematically reduce model size to find the optimal performance-cost balance for your specific use case.

- Leverage spot instances and caching: Use spot instances for up to 90% savings on training workloads, while implementing KV caching and batching to reduce repeated compute costs.

- Implement token-level tracking: Deploy granular cost visibility systems that track individual API calls and token usage across models, teams, and regions for precise cost allocation.

- Optimize data management: Use intelligent storage tiers like S3 Intelligent-Tiering, which can reduce storage costs by up to 95% for rarely accessed data, and eliminate data duplication to cut storage and processing overhead.

- Choose RAG vs fine-tuning strategically: RAG works better for frequently updated information with lower upfront costs, while fine-tuning proves more economical for high-volume, specialized applications.

The key to sustainable AI adoption lies in treating FinOps not as a constraint, but as an enabler that allows organizations to experiment more freely within predictable budgets while building scalable cost management foundations.

FAQs

Q1. What are some effective strategies for optimizing AI costs?

Key strategies include choosing the right model size, using spot instances for training, implementing caching and batching for inference, leveraging intelligent storage tiers, and implementing token-level cost tracking. These techniques can lead to significant cost reductions without compromising performance.

Q2. How does FinOps for AI differ from traditional FinOps?

FinOps for AI deals with unique challenges such as the probabilistic nature of AI outputs, token-based billing, and extreme sensitivity to minor changes. It requires specialized approaches for cost visibility, forecasting, and optimization that go beyond traditional cloud resource management.

Q3. When should I use fine-tuning versus retrieval-augmented generation (RAG)?

RAG is better for handling frequently updated information with lower upfront costs, while fine-tuning is more economical for high-volume, specialized applications. The choice depends on your specific use case, data freshness requirements, and expected query volume.

Q4. How can I reduce data-related costs in AI operations?

To reduce data costs, focus on cleaning and curating training data, use intelligent storage tiers like S3 Intelligent-Tiering, minimize data transfer between regions, and implement deduplication strategies. These approaches can significantly lower storage and processing expenses.

Q5. What role does prompt engineering play in AI cost optimization?

Prompt engineering is crucial for reducing token usage and associated costs. By crafting clear, concise instructions and eliminating unnecessary details, you can achieve token reductions of 3-10% without compromising output quality, leading to significant savings at scale.

Further Reading

- Amazon S3 Intelligent-Tiering: Real-world savings & deep archive options

- FinOps for AI: Practices, capabilities & governance

- Serverless Inference in SageMaker: Scale to zero for spiky traffic

- Microsoft on AI inference’s outsized share of lifecycle footprint

- NFL case study: ~$2M saved per season with EC2 Spot
